Amherst College Data* Mammoths

A research and learning group on "everything data" (data mining, knowledge discovery, network science, machine learning, databases, …), led by Prof. Matteo Riondato

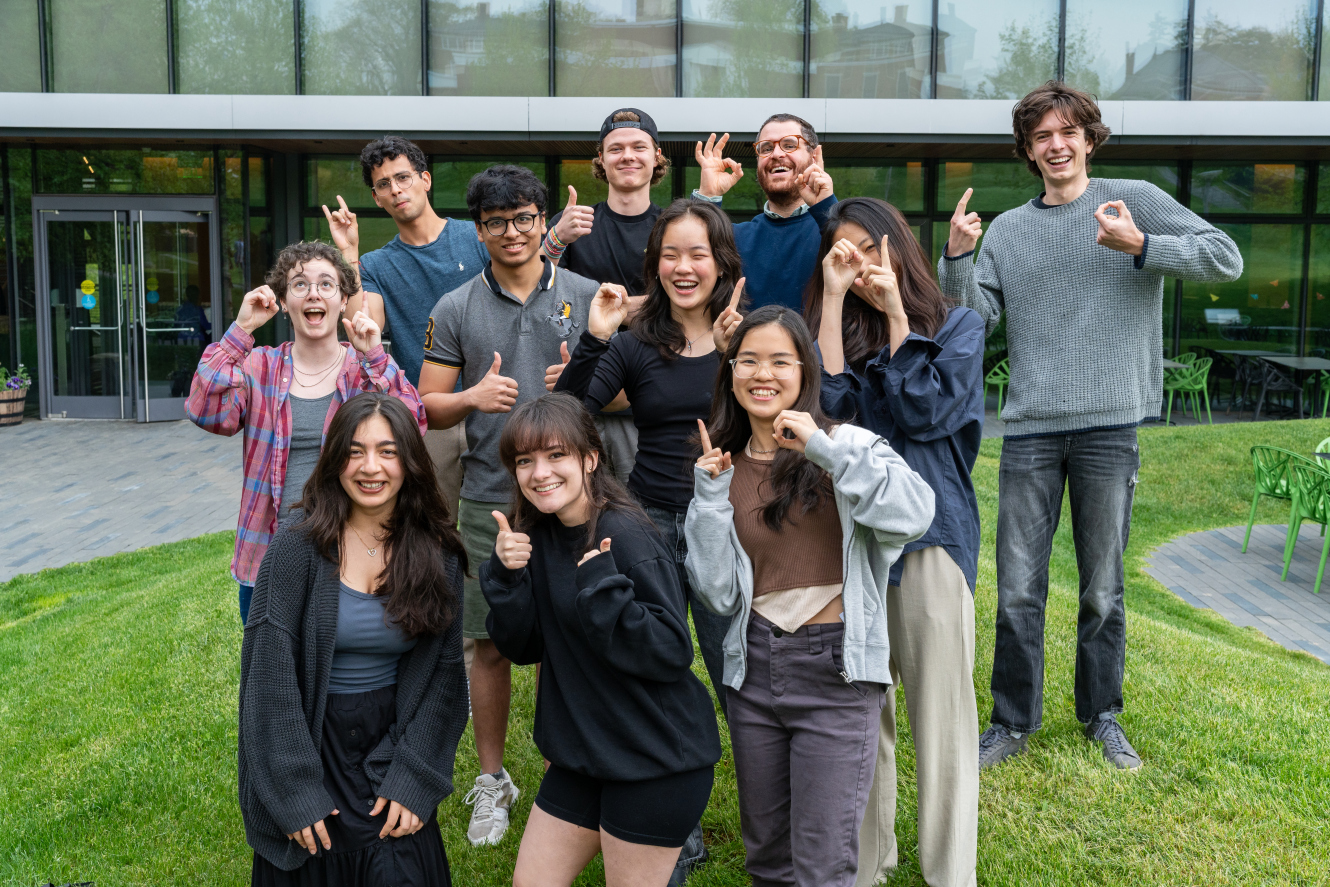

We are a research and learning group led by Prof. Matteo Riondato at Amherst College, mostly in the Computer Science department.

We create and learn about algorithms for “everything data”:¹ data mining, network science, machine learning, data science, knowledge discovery, databases, and much more. You can read more about what we do in the Q&A with Matteo for the college website.

The methods we develop often leverage randomness (e.g., sampling, statistical hypothesis testing, sketches) and offer strong guarantees on their performance.

Our research is sponsored, in part, by the National Science Foundation under CAREER award 2238693 and award III-2006765.

When we are not working together at the whiteboard, writing code, or reading papers, you can find us in courses such as COSC-254 Data Mining, COSC-257 Databases, COSC-351 Information Theory, COSC-355 Network Science, or taking an independent study course (COSC-490) with Matteo.

News

- Maryam was interviewed by the CRA about their experience as a young researcher.
- Daniel published a version of his honors thesis in AudioMostly’24.
- Maryam is starting their PhD at Brown CS in September’24, coadvised by Matteo and Eli Upfal.
- Alex is starting their PhD at Brown CS in September’24, advised by Ugur Cetintemel.
- Alex and Maryam both received 2024 NSF GRFPs to support 3 years of their PhD studies.
- Maryam received an honorable mention for the 2024 CRA Outstanding Undergraduate Researcher Award.
- Stefan was interviewed by the CRA about doing research with the Data Mammoths.
- Stefan was selected as a finalist for the 2023 CRA Outstanding Undergraduate Researcher Award.
- The journal version of Bavarian, coauthored by Chloe, was published in ACM TKDD.

Publications with Mammoths student authors

Mammoths student/alumni authors in italics.

- *Daniel Flores-Garcia*, Hugo Flores Garcia, and Matteo Riondato. ClaveNet: Generating Afro-Cuban Drum Patterns through Data Augmentation, AM ’24: Proceedings of the 19th International Audio Mostly Conference: Explorations in Sonic Cultures, 355–361.
- *Maryam Abuissa*, *Alexander Lee*, and Matteo Riondato. ROhAN: Row order agnostic null models for statistically-sound knowledge discovery. Data Mining and Knowledge Discovery (S.I. for ECML PKDD’23). GitHub repo
- Cyrus Cousins, *Chloe Wohlgemuth*, and Matteo Riondato. Bavarian: Betweenness Centrality Approximation with Variance-Aware Rademacher Averages. ACM Trans. on Knowledge Discovery from Data, 17(6):78, 2023. GitHub repo
- *Steedman Jenkins*, *Stefan Walzer-Goldfeld*, and Matteo Riondato. SPEck: Mining Statistically-significant Sequential Patterns Efficiently with Exact Sampling, Data Mining and Knowledge Discovery, 36(4):1575–1599, 2022 (S.I. for ECML PKDD’22). GitHub repo
- *Alexander Lee*, *Stefan Walzer-Goldfeld*, *Shukry Zablah*, and Matteo Riondato. A Scalable Parallel Algorithm for Balanced Sampling. AAAI’22 (student abstract). GitHub repo
- Cyrus Cousins, *Chloe Wohlgemuth*, and Matteo Riondato. Bavarian: Betweenness Centrality Approximation with Variance-Aware Rademacher Averages. ACM KDD’21.

Members

- Megan Li’27 (Summer’24–), algorithms for statistically-sound knowledge discovery

Alumni

- Michelle Contreras Catalan’25 (Fall’22–Spring’25), algorithms for statistically-sound knowledge discovery
- Sergei Leonov’27 (Summer’24–Spring’25), algorithms for statistically-sound knowledge discovery
- Jordan Perry-Greene’24E (Spring’24–Fall’24), relational databases and websites for research groups and department libraries
- Lisa Phan’27 (Summer’24), algorithms for statistically-sound knowledge discovery
- Wendy Espinosa’25 (Spring’24), relational database for the Clotfelter Lab
- Hena Ershadi’26 (Spring’24–Summer’24), FPGA acceleration for statistically-sound knowledge discovery (together with Lillian Pentecost)
- Sriyash Singhania’26 (Summer’23–Spring’24), algorithms for statistically-sound knowledge discovery, relational database for the Follette Lab
- Maryam Abuissa’24 (Spring’22–Spring’24), algorithms for statistically-sound knowledge discovery.
- Daniel Flores-Garcia’24 (Spring’23–Spring’24), honors thesis on generating Afro-Cuban drum patterns with deep learning and data augmentation
- Hailin (Angelica) Kim’24 (Spring’23–Spring’24), algorithms for statistically-sound knowledge discovery. Honors thesis on causal discovery
- Ris Paulino’25 (Summer’23–Spring’24), a database for a museum
- Hewan Worku’25 (Summer’23–Spring’24), algorithms for statistically-sound knowledge discovery
- Sarah Wu (Summer’23–Spring’24), a database for a museum
- Wanting (Sherry) Jiang’25 (Spring’23–Fall’23), database design for educational surveys, and algorithms for statistically-sound knowledge discovery.
- Sarah Park’23 (Summer’22–Spring’23), honors thesis (cum laude) on higher-power methods for statistically-significant patterns.
- Stefan Walzer-Goldfeld’23 (Fall’20–Spring’23), honors thesis (magna cum laude, 2023 Computer Science Prize) on null models for rich data, null models for sequence datasets, scalable algorithms for cube sampling.
- Dhyey Mavani’25 (Fall’22), efficient implementations of algorithms for significant pattern mining.
- Steedman Jenkins’23 (Spring’21–Fall’22), mining statistically-significant patterns.
- Shengdi Lin’23E (Spring’22–Fall’22), honors thesis (cum laude) on rapidly-converging MCMC methods for statistically-significant patterns.
- Adam Gibbs’22 (Fall’21–Spring’22), parallel/distributed subgraph matching with security applications.
- Alexander Lee’22 (Fall’20–Spring’22), scalable algorithms for cube sampling, statistical evaluation of data mining results. Honors thesis (summa cum laude), 2022 Computer Science Prize.
- Vaibhav Shah’23 (Fall’21), teaching materials for the COSC-254 Data Mining course.
- Maryam Abuissa’24 (Summer’21), teaching materials for the COSC-111 Introduction to Computer Science 1 course.
- Holden Lee’22 (Spring’20–Spring’21), sampling-based algorithms for frequent subgraph mining.
- Isaac Caruso’21 (Fall’20–Spring’21), honors thesis on “Modeling Biological Species Presence with Gaussian Processes”, cum laude.
- Conrad Kuklinsky’21 (Spring’19–Fall’20), VC-dimension for intersections of half-spaces.
- Margaret Drew’22 (Summer’20), assignments and videos for the COSC-111 Introduction to Computer Science 1 course.
- Kathleen Isenegger’20 (Fall’19–Spring’20), honors thesis (magna cum laude) on “Approximate Mining of High-Utility Itemsets through Sampling”.
- Chloe Wohlgemuth’22 (Fall’20–Spring’22), sampling-based algorithms for centrality approximations in graphs.
- Shukry Zablah’20 (Fall’19–Spring’20), honors thesis (magna cum laude, 2020 Computer Science Prize) on “A Parallel Algorithm for Balanced Sampling”.

How to join

If you are an Amherst student interested in mixing data, computer science, probability, and statistics, please contact Matteo. With rare exceptions, you should have taken or be enrolled in COSC-211 Data Structures. Having taken one or more courses in probability and/or statistics (e.g., STAT-135, COSC-223, MATH/STAT-360) is a plus, but not necessary. If you haven’t, you will likely spend a semester learning about probability in computer science, possibly in an independent study course with Matteo. Here’s an interview with a former Data Mammoth about what the experience could look like.

🦣 💜 💾 We love data!

1. That’s the reason for the * in Data*, as X* means “everything X”, in computer science jargon.